Several universities have told us that they have had what sounds like the same Freedom of Information (FOI) request from a national newspaper. Most of the data requested are easy to extract using Talis Decisions but one area is statistics on historical overdues. Data on current Overdue are easy to extract using Talis Decisions, but the need to extract detailed data on historical Overdues was not envisaged. This raises some interesting issues of principle:

- How should permanently lost items be accounted for? Without some thought here, the statistics are likely to reflect items most commonly lost or stolen rather than those which are eventually returned.

- Should the data be at the level of individual item or barcode (e.g. this particular instance was most commonly overdue), at the level of an individual catalogue record (Bib ID) or even at the level of all editions (e.g. all editions of “Macbeth”)

- Should all types of Loan and all types of Item be included? What about home delivery, books on prescription and all those edge cases?

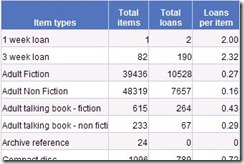

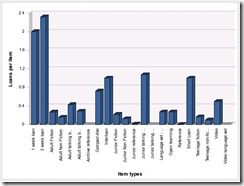

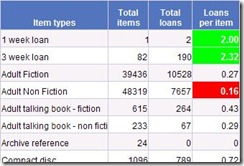

Illustrated below is one example of a possible approach to this problem. The assumptions made are that:

- Data should be accumulated at the level of Control Number (i.e. catalogue record)

- Overdues above a particular threshold should be excluded

- Certain item types should be excluded

The queries

There are many ways to organise this. In the example report created for this, I reported on Fines as well and used four queries, merging the results . Only two are needed for the Overdue report. These are discussed below

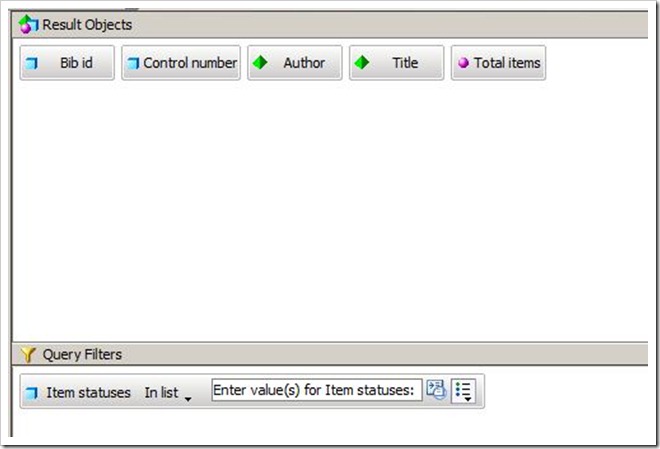

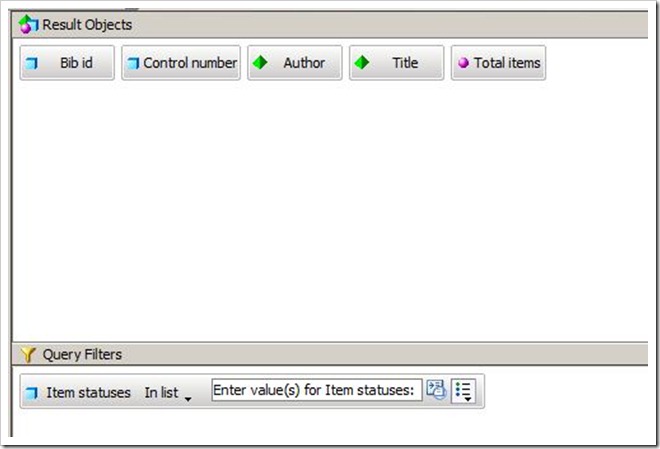

Bib Data

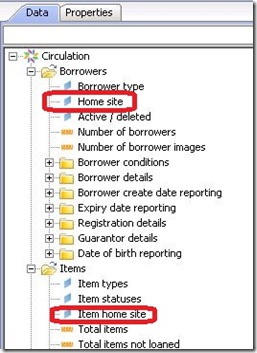

This query brings back the Bibliographic Data Author and Title for the items that you want to include:

The list is limited by Item Status as a prompt, in part to reduce query execution time by (for example) excluding redundant items.

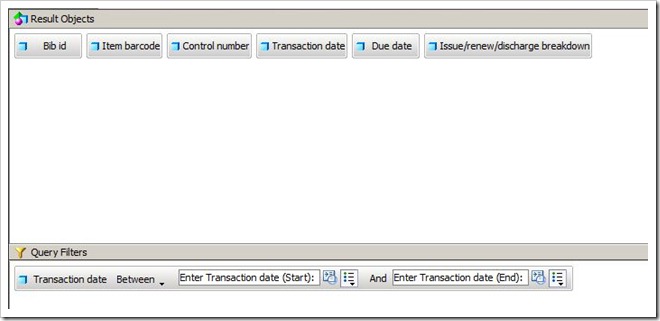

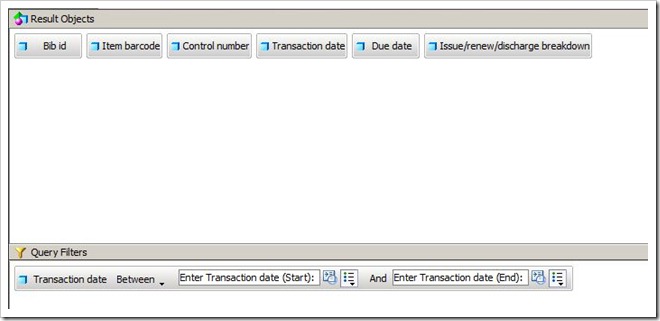

Overdue Items

This is the most complex query. It brings back the Transaction Date and the Due Date by Item Barcode. These data are used to calculate aggregate overdues:

Note the prompt limits on Transaction date. Without limits on the date range, this query could run for a very long time. A further filter could be used to bring back only discharge transactions.

As it stands, this query only brings back current overdues. The generated SQL must be modified to bring back all overdues. To do this, proceed as follows:

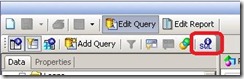

Click on the SQL button on the control bar in this query:

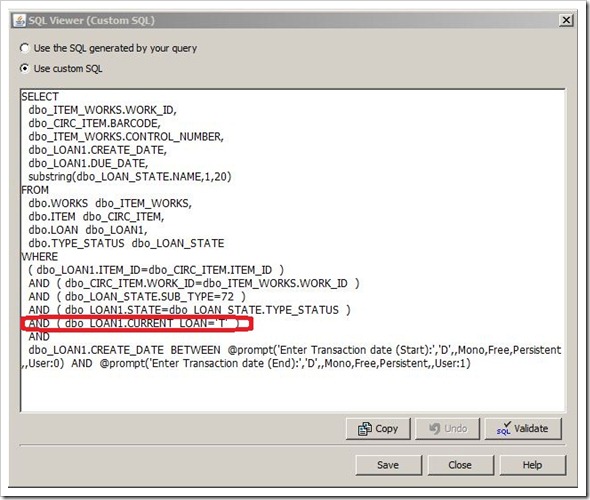

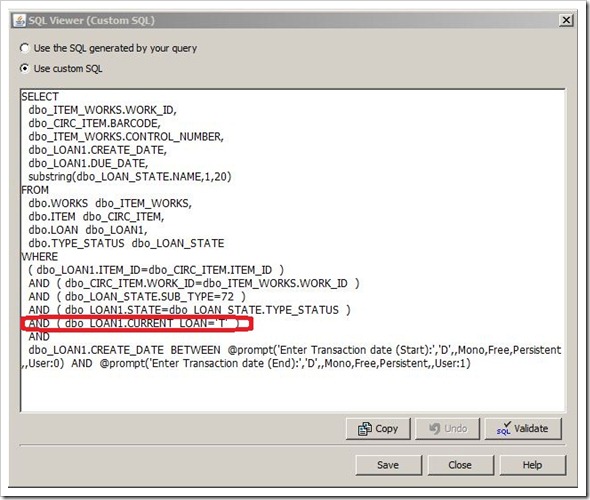

This will open up a window which allows you to modify the SQL. Delete the line ringed in red:

NB: if you make any changes to this query (including filters) the SQL is regenerated and this step must be repeated

Once these queries are produced the report can be run

Variables

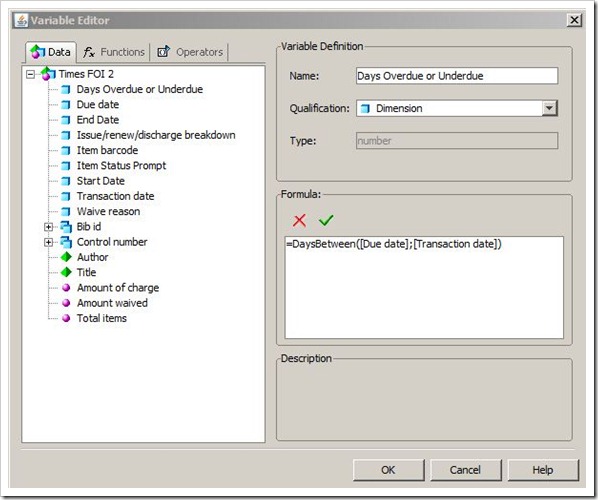

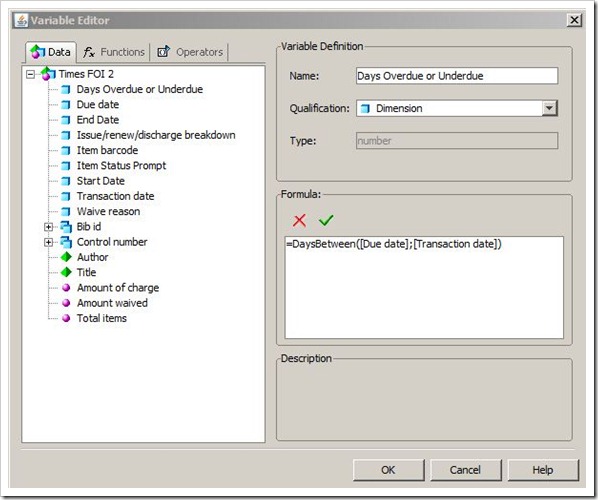

To transform the raw dates into an elapsed overdue days, I used a variable thus:

The Formula used is: =DaysBetween([Due date];[Transaction date]). This calculates the number of days between the due date and transaction date for each item.

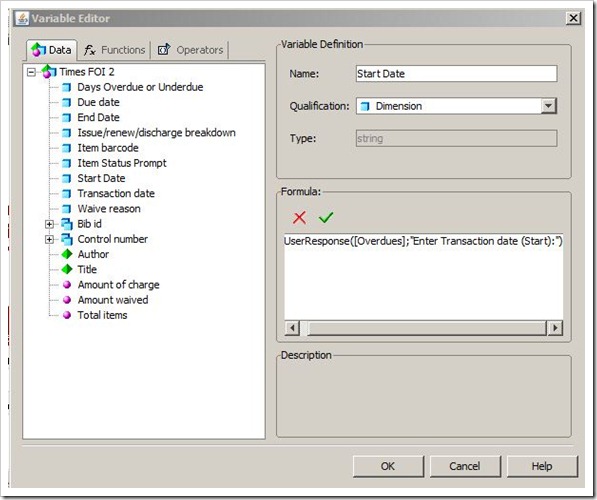

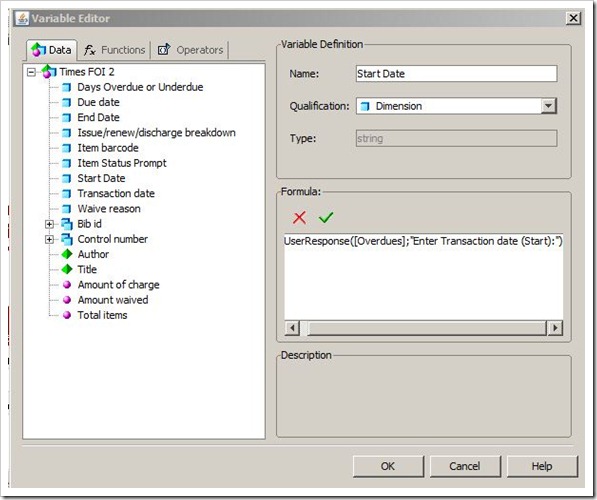

I also used variables to calculate and display parameters. Here is an example for a variable I called End Date (this displays the transaction end date used in the prompts in the most recent refresh of the report:

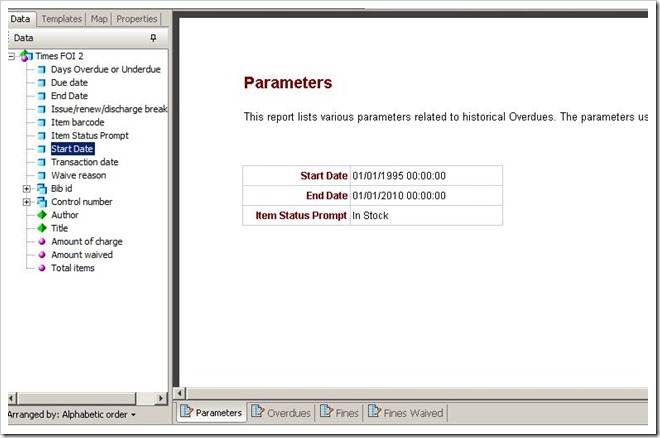

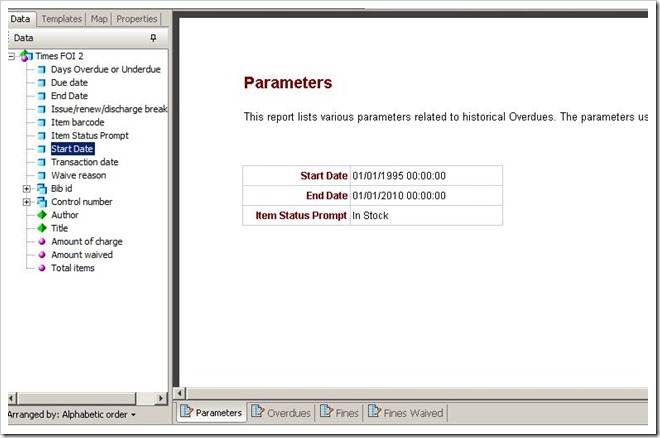

This uses the UserResponse function. Note that you may need to include the optional Query Name (“Overdues”) in this example if you are using the same prompt text in multiple queries as described earlier. This allows you to create a report page which documents the parameters used in the report thus:

Reports

This should give you the data that are needed to create the reports that you need. Two other ideas might be helpful:

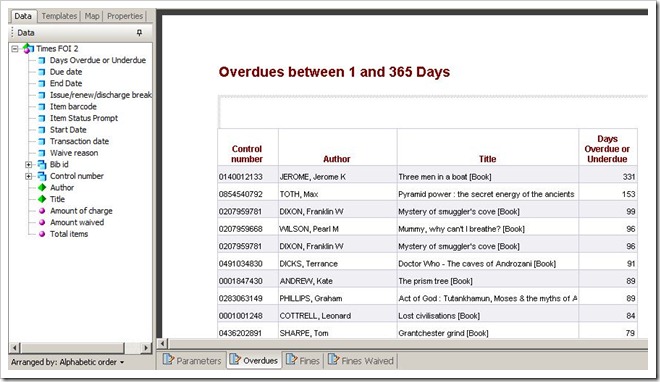

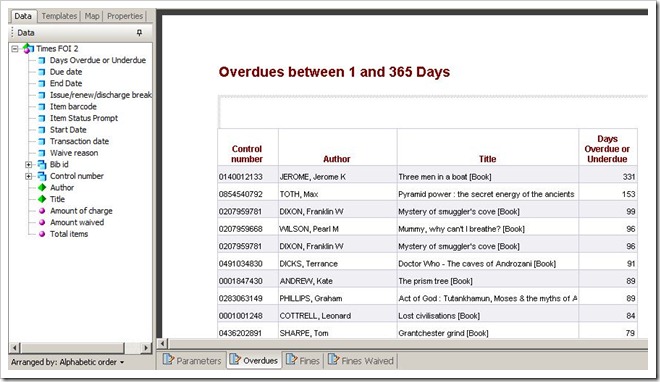

- You can use a Report filter (as opposed to a Query filter) to restrict the Days Overdue or Underdue to a range (say 1 – 365) to filter out those where the item has been returned before the due date and also those that will almost certainly never be returned.

- If you right click the Days Overdue or Underdue column in a report you can sort it descending

These features are illustrated in this sample report:

In Conclusion

If this has been helpful, please email me directly, or comment below. If you would like a BIAR file containing an example report, please email me.

There are many on line survey tools, some of them free; Survey Monkey is one example which we have used ourselves in the past. If your organisation has a CRM (Customer Relationship Management) system then it may have built in survey facilities. Even Google Docs allows you to create forms and accumulate the responses in a Google Docs spreadsheet. For many purposes this would be quite adequate.

There are many on line survey tools, some of them free; Survey Monkey is one example which we have used ourselves in the past. If your organisation has a CRM (Customer Relationship Management) system then it may have built in survey facilities. Even Google Docs allows you to create forms and accumulate the responses in a Google Docs spreadsheet. For many purposes this would be quite adequate.

Recent Comments